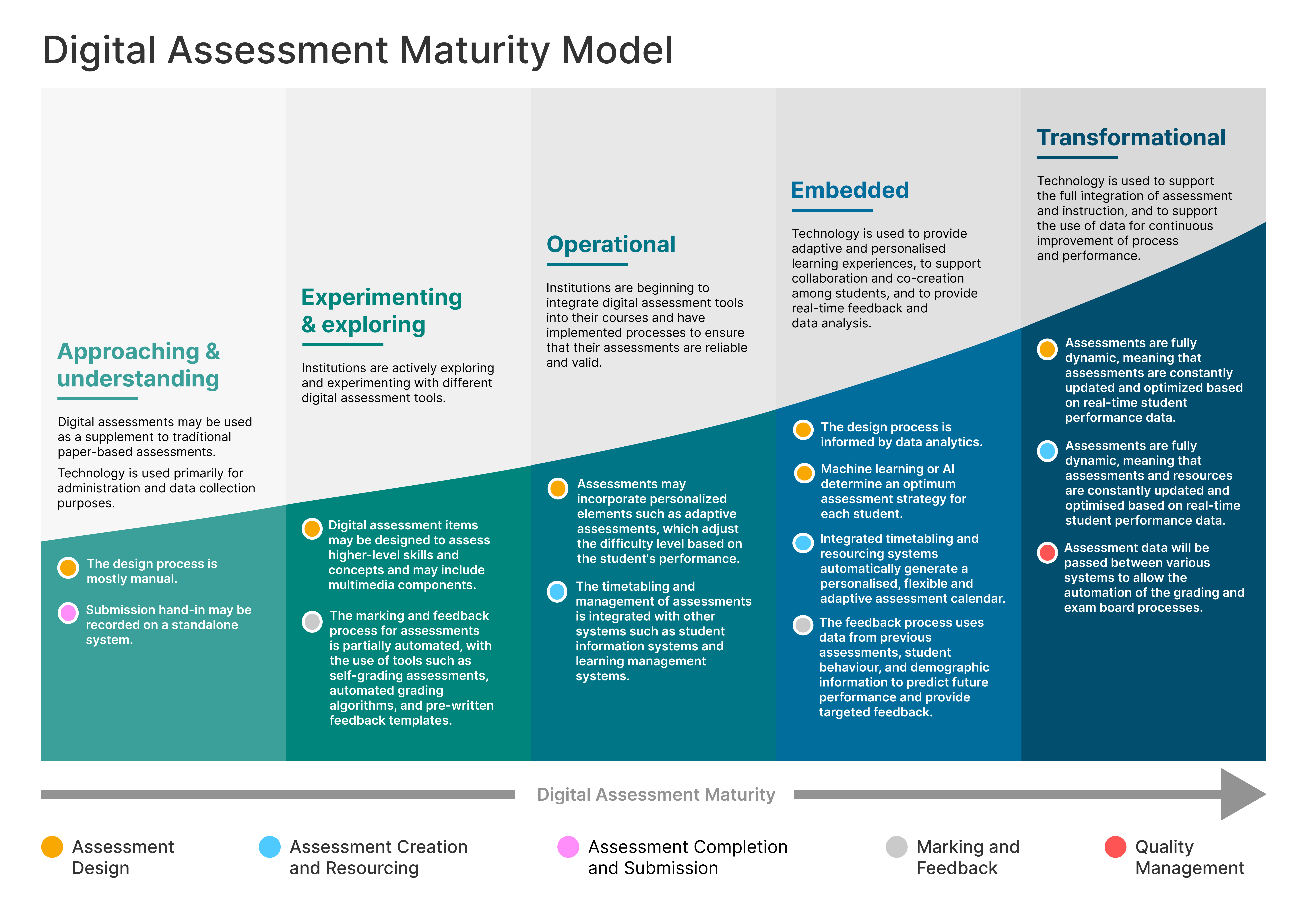

As part of our work on a digital assessment maturity model, this post takes a more in-depth look at the creation and resourcing phase.

Assessment creation and resourcing

At the level of approaching and understanding, assessments are often limited to a small number of assessment types and are manually created. The timetabling and administration of assessments are manual, paper-based processes that rely on the labour of academics and administrators. Process and practice may be inconsistent, potentially leading to errors and scheduling conflicts.

When experimenting and exploring digital assessment creation and resourcing, you may begin to see the use of standardised templates and question banks. There may be some level of automation of the creation process, and the integration of multimedia or interactive elements. Spreadsheets or calendars may be used to record and plan exam and assignment timetables. There is little integration of these systems with others relating to the assessment process.

As digital assessment creation is operationalised, the creation process is more automated and may offer rule-based differentiation or personalisation of assessments, such as the use of adaptive release in VLEs. There will begin to be a level of integration between timetabling, assessment management, VLE, curriculum management, or student information systems. Timetabling is fully automated but may rely on some human ‘tidying’. Assessment load and bunching can be identified and acted upon. Additional resources will be available to students to help them develop their assessment skills, often within the assessment system itself.
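To illustrate what identifying bunching might look like once deadline data is held digitally, here is a minimal sketch in Python. It is not part of the maturity model itself: the function name `find_bunching`, the `(student, assessment, due date)` record shape, and the threshold of two deadlines per week are all assumptions made for the example, and a real institution would set its own rules.

```python
from collections import defaultdict
from datetime import date


def find_bunching(deadlines, max_per_week=2):
    """Return the weeks in which a student has more deadlines than max_per_week.

    deadlines: iterable of (student, assessment, due_date) tuples (hypothetical shape).
    max_per_week: assumed threshold; chosen here purely for illustration.
    """
    by_week = defaultdict(list)
    for student, assessment, due in deadlines:
        iso = due.isocalendar()  # (ISO year, ISO week number, weekday)
        by_week[(student, iso[0], iso[1])].append(assessment)
    # Keep only the student-weeks that exceed the threshold.
    return {key: names for key, names in by_week.items() if len(names) > max_per_week}


# Made-up example data, not taken from any real timetable.
deadlines = [
    ("student-a", "Essay 1", date(2024, 3, 4)),
    ("student-a", "Lab report", date(2024, 3, 6)),
    ("student-a", "Online quiz", date(2024, 3, 8)),
    ("student-a", "Presentation", date(2024, 4, 15)),
    ("student-b", "Essay 1", date(2024, 3, 4)),
]

bunched = find_bunching(deadlines)
for (student, year, week), names in bunched.items():
    print(f"{student}: {len(names)} deadlines in {year} week {week}: {', '.join(names)}")
```

In this sketch, grouping by ISO week is only one possible definition of bunching. A rolling window of days, or a weighting by assessment size, might reflect student workload better.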

When digital assessment is embedded, integrated timetabling, assessment and student information systems will automatically generate a personalised, flexible and adaptive assessment. Students may have the opportunity to choose the mode of assessment to evidence meeting learning outcomes. The creation process will use data from historical assessments, student performance and demographic data to optimise the creation and resourcing of assessment.

Digital assessment creation and resourcing may be transformational when assessments are fully dynamic. This means the modes, scheduling, and resourcing of assessments may be variable and flexible. Students may be able to complete assessments according to their own schedules, rather than follow the institution’s calendar. Timetabling may be fully automated, actively avoid bunching, and allow personalisation through the booking of assessment windows. Curriculum data from other systems may be used to automatically generate assessment points.

Read more

- Introduction

- Assessment design

- Assessment creation and resourcing

- Assessment completion and submission

- Marking and feedback

- Quality management
