When the COVID-19 pandemic hit in early 2020, it forced UK education institutions to shift teaching online. It was widely assumed that this might last only a few weeks, but as spring gave way to summer it became clear that assessments would have to change markedly and quickly. Three years on, we have reached a point where we can reflect on these rapid changes in practice. Maybe you introduced a number of new software services and are struggling to embed their use, or maybe you feel you failed to capitalise on some of the benefits of digitalisation throughout your digital assessment process.

At Jisc, we have begun developing a digital assessment maturity model that allows institutions to assess their current level of maturity and use it to plan their next steps.

Creating the maturity model

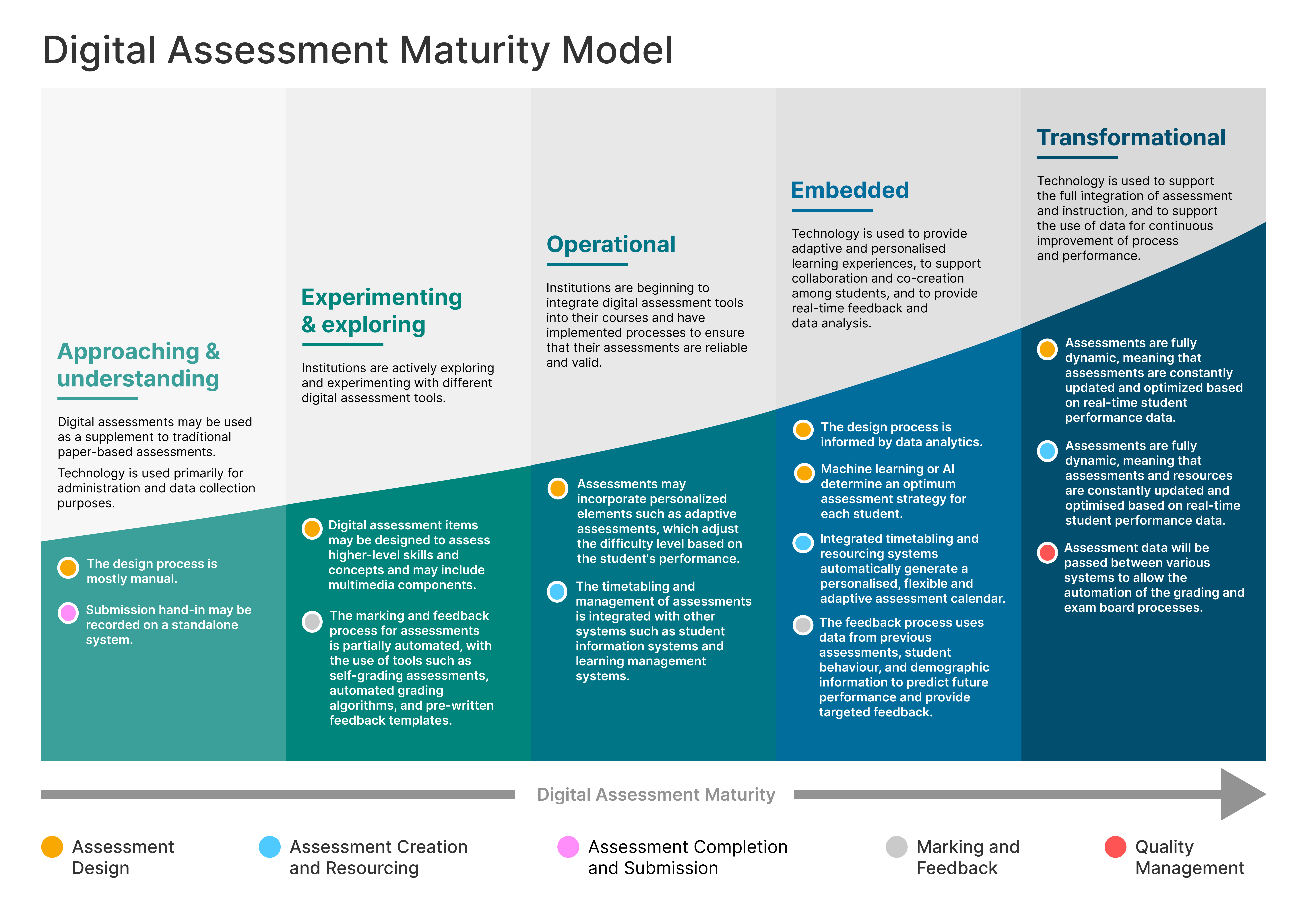

We have split the maturity model into five stages, with each stage denoting a significant shift in what you may observe. The stages are:

- Approaching and understanding,

- Experimenting and exploring,

- Operational,

- Embedded,

- Transformational.

The progression from one stage to the next is cumulative; each stage builds on the one before. Within each stage, we have attempted to identify activities from across the wide spectrum of assessment practice within an institution. This is by no means exhaustive, and we will be following up this post with a series of more in-depth blog posts on each phase of the assessment process.

The model is not intended to be a pathway you progress along, but rather a self-assessment checkpoint that offers suggestions for the future of digital assessment in your institution. For many institutions, moving further along the digital assessment maturity model may not be appropriate or favourable. Indeed, achieving ‘transformational’ may not be compatible with your internal practices or strategies.

Please don’t use this as a checklist to determine your stage; use it as a holistic tool to give a potential direction of travel. Remember that Jisc has a range of experts and experience in supporting the development of digital assessment, so please reach out to your Relationship Manager for more information.

Considerations and constraints

We have purposely avoided assessment methods and methodologies, as they are often subjective and pedagogically driven (don’t worry, we are looking at that elsewhere in Jisc). Instead, we have tried to focus on the digital assessment process and the underlying technologies as enablers.

We identified five phases of the assessment process: design, creation and resourcing, completion and submission, marking and feedback, and quality management. Although institutions may have their own unique way of approaching digital assessment, we anticipate these phases are suitable for all. However, you may find that the phases occur linearly or concurrently, and that the activities related to each phase are carried out by different teams in your institution. We will be following up this post with a series that unpacks each phase in more detail, giving specific examples of some of the activities you might see for each one.

Introducing the model

Digital assessments and the digitalisation of the processes surrounding assessment may be rare or non-existent at the approaching and understanding stage. Many activities will be paper-based and manual. Where technology is used to support assessment, it is primarily around administration or data collection. Assessments will be designed to be accessible to all students, which may include the use of digital alternatives, assistive technologies or interventions such as an amanuensis.

Institutions that are actively experimenting and exploring may be using digital assessments in small-scale trials, or looking at alternative assessment modes and methods. This may include automated knowledge-check MCQs (multiple choice questions) and formative assessment. They are beginning to understand the benefits of using technology to assess student learning, and to build the skills and knowledge required to effectively implement digital assessment tools. Institutions may be looking at BYOD (bring your own device) or other policies to ensure students have access to suitable learning technologies.

When digital assessment is operational, it may be used to enhance traditional assessment processes and to introduce optimisations into them. Essays are submitted via a VLE, exams may have moved online, and the use of anti-plagiarism software may be commonplace. There may be processes and policies designed to ensure digital assessments are consistent, reliable and valid. Discrete systems to support assessment may start being integrated and specified in the curriculum. There is suitable infrastructure and resource to support digital assessment, including Wi-Fi, hardware, and software. Staff and students are trained and supported in the use of new technologies for assessment.

Digital assessments become fully integrated and embedded into the curriculum when technology begins to provide adaptive and personalised learning and assessment experiences for students, and data analytics for staff use. Assessment technologies are integrated with various institutional systems, such as the VLE, student record system, and curriculum management system, to allow the creation of a holistic picture of student performance. Analytics may be used to trigger interventions for students at risk of failing. There may be purpose-designed spaces on campus for student assessment.

At the transformational stage, digital assessments are used to support and enable different forms of pedagogic practice, such as problem-based learning, competency-based education and personalised learning. Data generated by assessment is used for continuous improvement of process and performance. Institutions have fully integrated digital assessment tools into their teaching and learning. They are actively and critically exploring new technologies and approaches for assessment, and are beginning to use predictive analytics to better understand student learning.

Next steps

This work is at a very early stage, and we are looking to road test it with the sector. We plan to work with colleagues from various universities to further refine this model, in the hope that it provides value to senior leaders, timetabling managers, IT directors and quality management leaders.

If you are interested in being part of this review, please contact us. If you have any comments or questions, please add them below.

2 replies on “Introducing the digital assessment maturity model”

[…] released v0.1 of the Digital Assessment Maturity Model in March 2023, and spent the rest of the month talking to […]
