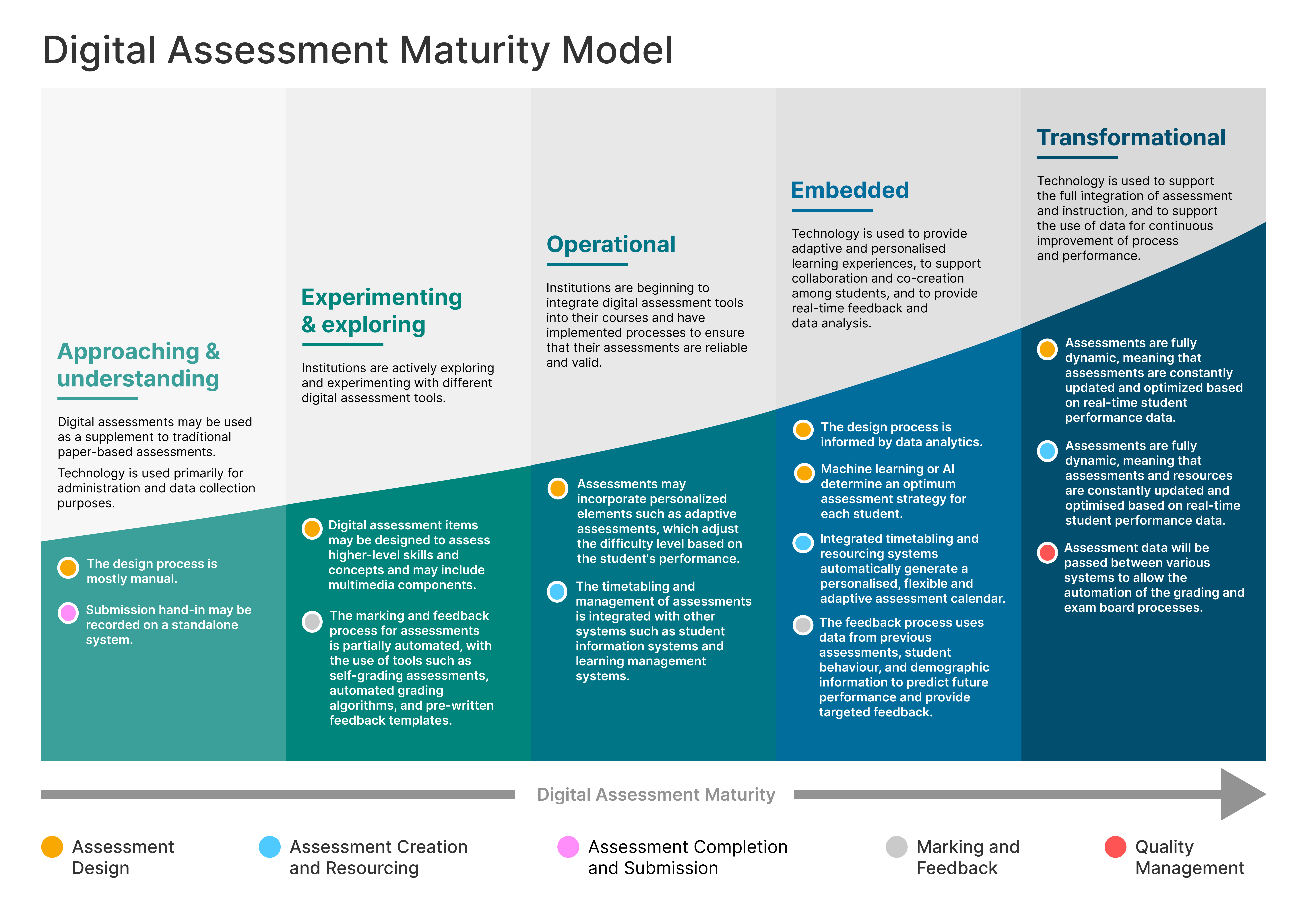

As part of our work on a digital assessment maturity model, this post takes a more in-depth look at the marking and feedback phase.

Marking and feedback

Marking and feedback for assessments are manual at the approaching and understanding stage. Staff grade assignments and provide handwritten feedback to students. The feedback process is often time-consuming and may not be consistent across teachers or assessments.

At the experimenting and exploring stage, some level of automation may be introduced into the process. Multiple choice questions may have pre-determined grading and automated per-question or generalised feedback. Feedback begins to be delivered in digital formats. Academic staff have access to suitable equipment to support digital marking, such as two monitors or input devices.
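As an illustration only, pre-determined grading with per-question and generalised feedback could be sketched as follows. The `Question` class, the function names, the feedback wording and the 70% threshold are all hypothetical and are not taken from any particular assessment platform.

```python
from dataclasses import dataclass


@dataclass
class Question:
    """A multiple choice question with pre-determined feedback (hypothetical structure)."""
    prompt: str
    correct: str
    feedback_correct: str
    feedback_incorrect: str


def mark_quiz(questions, answers):
    """Return the score and a list of per-question feedback strings."""
    score = 0
    feedback = []
    for question, given in zip(questions, answers):
        if given == question.correct:
            score += 1
            feedback.append(question.feedback_correct)
        else:
            feedback.append(question.feedback_incorrect)
    return score, feedback


def general_feedback(score, total, threshold=0.7):
    """Return generalised feedback; the 70% threshold is an assumed value."""
    ratio = score / total if total else 0.0
    if ratio >= threshold:
        return "Good grasp of the topic."
    return "Review the topic materials before the next attempt."


quiz = [
    Question("2 + 2 = ?", "4", "Correct.", "Revisit basic addition."),
    Question("3 x 3 = ?", "9", "Correct.", "Revisit multiplication tables."),
]
score, per_question = mark_quiz(quiz, ["4", "6"])
summary = general_feedback(score, len(quiz))
```

Here the per-question feedback is fixed when the question is written, so every student who gives the same answer receives the same comment. That consistency is the main gain over manual marking at this stage.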

At an operational stage, personalised, digital feedback is provided for each student. There may be some element of aggregation and analysis of marking and feedback to support both longitudinal development for individual students and cohort analysis. Academic staff have access to multimedia creation resources in order to provide marking and feedback in the most appropriate manner. For group assessments, it may be possible to mark and give feedback based on whole-group and individual contributions within the system. Rubrics will be used, but may not be marked against digitally. To support staff, assessment content may be converted to different media types to best support their working practices or additional needs. There may be provision for second-marking, multiple markers, team marking or batch marking within cohorts.

When digital assessment is embedded, analytics and predictive models may be incorporated to provide adaptive, automated feedback. Feedback may be aggregated for individual students to allow them to note patterns. Rubric use is systematic and widespread, with grading and associated feedback digitally captured and shared with other systems. Group assessments may allow peer assessment or weighting. The use of standard feedback phrases may be partially automated or suggested. Second-marking, multiple markers, team marking, or batch marking may be fully automated based on pre-set criteria.
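To make the idea of digitally captured rubric marking more concrete, here is a minimal sketch. The rubric criteria, percentage weights, level names and record layout are invented for illustration, and JSON is only one assumed way of sharing the result with other systems.

```python
import json

# Hypothetical rubric: weights are percentages that sum to 100.
RUBRIC = {
    "argument": {"weight": 50, "levels": {"excellent": 100, "good": 70, "developing": 40}},
    "evidence": {"weight": 30, "levels": {"excellent": 100, "good": 70, "developing": 40}},
    "presentation": {"weight": 20, "levels": {"excellent": 100, "good": 70, "developing": 40}},
}


def mark_against_rubric(rubric, selections, comments):
    """Build a marking record from the level chosen for each criterion."""
    weighted_sum = 0
    criteria = []
    for name, spec in rubric.items():
        level = selections[name]
        points = spec["levels"][level]
        weighted_sum += spec["weight"] * points
        criteria.append({
            "criterion": name,
            "level": level,
            "points": points,
            "comment": comments.get(name, ""),
        })
    # Divide by 100 because the weights are expressed as percentages.
    return {"total": weighted_sum / 100, "criteria": criteria}


record = mark_against_rubric(
    RUBRIC,
    {"argument": "good", "evidence": "excellent", "presentation": "developing"},
    {"presentation": "Check the referencing format."},
)
# Serialise the record so that it could be passed to another system.
payload = json.dumps(record)
```

Because the grade and the comments are held together in one structured record, the same data can feed aggregation for individual students as well as reporting to other systems.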

To be transformational, the marking and feedback process is dynamic and adaptive. Aggregated feedback will prompt skill development for students, and will highlight suitable resources and support opportunities automatically. The use of AI or machine learning may automate some of the marking and feedback process. Feedback may be constantly updated and optimised based on real-time student data. Academic integrity detection may encompass new technologies and methods, such as AI authoring. This may include automated checks, or the ability to review document histories or analyse snapshots taken during the production of the assessment submission.
